Detection Timing vs. Prediction Accuracy: The KPI No One Tracks

In predictive maintenance conversations, one question shows up almost immediately:

“How accurate is it?”

Accuracy feels objective. It feels technical. It feels like the right way to evaluate a solution. It is also the metric most likely to mislead reliability teams.

An alert can be mathematically correct and still be operationally useless. If it arrives after access is constrained, throughput is already impacted, or a line is already down, accuracy no longer matters. The system may have succeeded analytically while failing operationally.

Outcomes are not determined by how right a prediction is. They are determined by when the signal shows up. Detection timing, not prediction accuracy, is the KPI that actually changes what teams can do.

Learn more here:

The Impact of Predictive Maintenance on Industrial Performance

This peer-reviewed article documents how predictive maintenance, by analyzing real-time data and trends, anticipates potential failures and helps teams plan maintenance before breakdowns occur. That supports the argument here: timely insight, not accuracy alone, is what reduces losses and improves reliability.

The “X Days Before Failure” Trap

Many maintenance tools are marketed around a simple promise: detect failure X days before it happens.

That framing is appealing because it sounds precise. It is also fundamentally flawed.

Failures do not progress on a single, universal timeline. There is no consistent countdown clock that applies across assets, environments, or operating conditions. Treating degradation as a uniform march toward failure creates false confidence and encourages the wrong questions. This is why condition-based monitoring holds up better than time-based prediction claims in real-world operations.

Instead of asking whether a system can predict failure days in advance, teams should be asking something else entirely:

Does this signal arrive early enough to intervene safely and deliberately?

If the answer is no, the number of “days before failure” is irrelevant. When the signal arrives matters more than how accurately the failure is labeled after the fact.

Failure Modes Do Not Surface on the Same Clock

Different assets expose degradation in very different ways.

Some failure modes provide long lead times. Others compress rapidly once conditions change. Evaluating all of them through a single timing metric obscures risk instead of reducing it.

Consider common industrial assets:

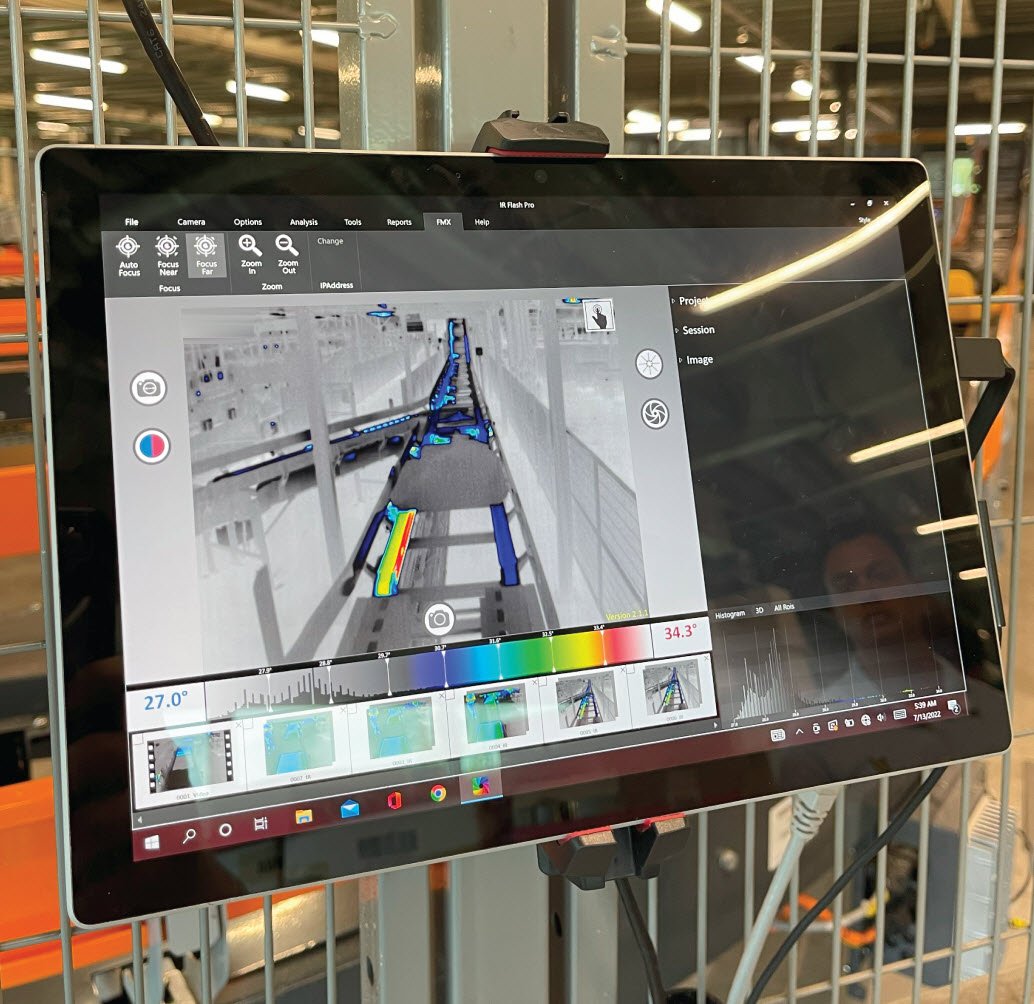

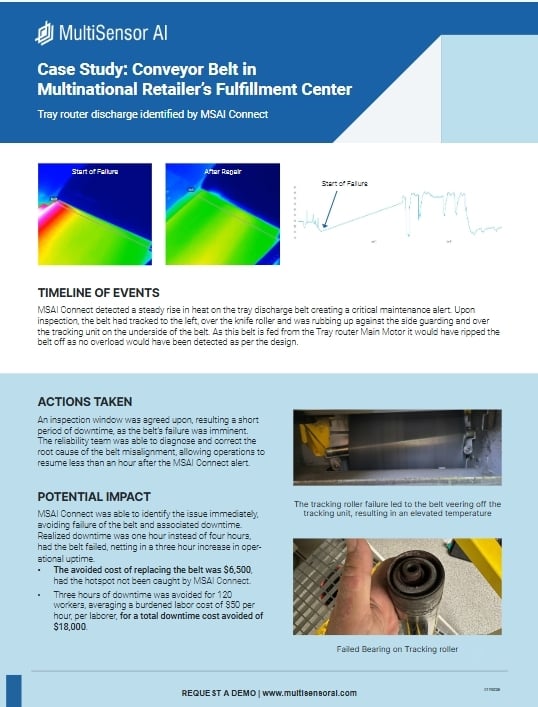

- Conveyors may exhibit subtle changes in load behavior or thermal patterns long before movement is affected

- Drives and VFDs can appear stable until operating thresholds are crossed, after which faults escalate quickly

- Electrical panels may drift slowly under normal conditions, then deteriorate rapidly during peak demand

Each of these assets can generate early signals. The difference lies in how asset-specific condition monitoring strategies capture those early changes. Detection timing only matters relative to the failure mode. Accuracy averaged across assets does not.
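
Any single averaged number hides this, and lead time is no exception. The minimal sketch below assumes that hypothetical detection lead times are grouped by failure mode and that each mode is checked against its own required intervention window. The failure-mode names, lead times, and windows are made up for illustration. In practice they would come from a site's own maintenance history and planning constraints.

```python
from statistics import mean

# Hypothetical lead times (hours from first signal to failure), per failure mode.
lead_times_h = {
    "conveyor_bearing_wear": [300.0, 420.0, 250.0],
    "vfd_overtemp_fault": [5.0, 3.0, 8.0],
    "panel_connection_heating": [120.0, 30.0, 200.0],
}

# Hypothetical hours each failure mode needs to arrange access, labor, and parts.
required_window_h = {
    "conveyor_bearing_wear": 72.0,
    "vfd_overtemp_fault": 24.0,
    "panel_connection_heating": 48.0,
}


def summarize(lead_times, windows):
    """Compare each failure mode's lead times against that mode's own window.

    Returns {failure_mode: True if every signal arrived in time}.
    """
    all_times = [t for times in lead_times.values() for t in times]
    print(f"average lead time across all assets: {mean(all_times):.0f} h")
    report = {}
    for mode, times in lead_times.items():
        window = windows[mode]
        # Count individual late signals instead of averaging them away:
        # each late one is a missed chance to plan the work.
        late = [t for t in times if t < window]
        report[mode] = not late
        status = "OK" if not late else f"{len(late)} of {len(times)} too late"
        print(f"{mode}: min {min(times):.0f} h vs {window:.0f} h needed -> {status}")
    return report


if __name__ == "__main__":
    summarize(lead_times_h, required_window_h)
```

With these illustrative numbers, the cross-asset average comes out near 148 hours and looks comfortable. Yet every VFD signal and one panel signal arrived inside the window the team would have needed to act.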

Why Late but Accurate Alerts Still Lead to Downtime

Many reliability programs celebrate alerts that correctly identify failures after they occur. Root cause is confirmed. The diagnosis is right. The model performs as expected.

Operationally, none of that prevents downtime, which is why unplanned downtime remains unchanged even when alerts are technically correct.

If a signal arrives after:

- access windows are missed

- production schedules are already disrupted

- labor is already responding reactively

then the outcome is fixed, regardless of how accurate the alert was. Accuracy evaluated after the fact rewards explanation, not prevention. Timing determines whether a decision can still be made. This is why prediction accuracy, on its own, is a poor measure of reliability effectiveness.
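
The minimal Python sketch below makes this concrete by scoring the same alerts two ways. It assumes each alert records whether the diagnosis was correct, when the signal was raised, and when the failure occurred. It also assumes the site needs a fixed intervention window to arrange access, labor, and parts. The `Alert` type, its field names, and the 48-hour window are hypothetical and are not drawn from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    """Hypothetical alert record; field names are illustrative only."""
    correct_diagnosis: bool  # did the alert identify the right failure mode?
    raised_at: datetime      # when the signal reached the team
    failed_at: datetime      # when the asset actually failed


def accuracy(alerts: list[Alert]) -> float:
    """Share of alerts with a correct diagnosis, ignoring timing entirely."""
    if not alerts:
        return 0.0
    return sum(a.correct_diagnosis for a in alerts) / len(alerts)


def actionable_rate(alerts: list[Alert], window: timedelta) -> float:
    """Share of alerts that were correct AND arrived at least `window` before failure."""
    if not alerts:
        return 0.0
    actionable = [
        a for a in alerts
        if a.correct_diagnosis and a.failed_at - a.raised_at >= window
    ]
    return len(actionable) / len(alerts)


if __name__ == "__main__":
    failure = datetime(2024, 5, 10, 8, 0)
    alerts = [
        Alert(True, failure - timedelta(days=5), failure),   # early enough to plan
        Alert(True, failure - timedelta(hours=6), failure),  # correct, but too late
        Alert(True, failure + timedelta(hours=1), failure),  # root cause confirmed after the outage
    ]
    window = timedelta(hours=48)  # hypothetical planning window
    print(f"accuracy:        {accuracy(alerts):.0%}")
    print(f"actionable rate: {actionable_rate(alerts, window):.0%}")
```

All three diagnoses are correct, so accuracy reports 100%. Only one alert in three arrived early enough to plan the work, and the script prints an actionable rate of 33%.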

What Detection Timing Actually Enables

When detection timing improves, maintenance planning shifts from reactive execution to deliberate coordination. Teams can:

- Dispatch maintenance before failure instead of during an outage

- Schedule work around production instead of against it

- Coordinate labor and access deliberately rather than reactively

- Apply consistent decision logic instead of one-off judgment calls

None of this requires precise failure forecasts or autonomous intervention. It requires signals that surface early enough to support human decisions.

The value is not in knowing exactly what will fail. The value is in knowing soon enough to act.

MSAI successfully upgrades condition monitoring and predictive maintenance processes in distribution center for Fortune 50 manufacturer.

What Early Detection Requires (and What It Doesn’t)

Early detection is often misunderstood as a volume problem. More alerts. More data. More dashboards.

In reality, it is a context problem. To act earlier, teams need to understand:

- What is changing in asset behavior or signal patterns

- How fast it is changing, including acceleration or intermittency

- Under what conditions degradation appears or intensifies

- When risk shifts from manageable to unacceptable

Without this context, alerts become background noise. With it, timing becomes actionable.

This does not require claiming exact prediction windows. It requires recognizing patterns early enough to intervene.
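
Here is a minimal Python sketch of that kind of pattern recognition. It covers two of the questions above: how fast a signal is changing, and when risk becomes unacceptable. The sketch assumes evenly spaced readings from one condition signal, such as a motor-housing temperature sampled hourly. It applies hypothetical limits on level, rate of change (first difference), and acceleration (second difference). The function names and limits are illustrative assumptions, not a tuned detection method.

```python
def differences(values):
    """First differences: change between consecutive, evenly spaced readings."""
    return [b - a for a, b in zip(values, values[1:])]


def assess(readings, level_limit, rate_limit, accel_limit):
    """Classify the latest state of an evenly sampled condition signal.

    rate  = change per sample (first difference)
    accel = change in rate per sample (second difference)
    All limits are hypothetical and would be set per failure mode.
    """
    if len(readings) < 3:
        raise ValueError("need at least three readings to estimate acceleration")
    rate = differences(readings)
    accel = differences(rate)
    if readings[-1] >= level_limit:
        return "unacceptable"  # already past the level where action is required
    if rate[-1] >= rate_limit or accel[-1] >= accel_limit:
        return "escalating"    # below the limit, but changing fast enough to plan now
    return "stable"


if __name__ == "__main__":
    limits = dict(level_limit=80.0, rate_limit=5.0, accel_limit=1.0)
    print(assess([60.0, 60.0, 61.0, 61.0, 61.0], **limits))  # flat after a small step
    print(assess([60.0, 60.0, 61.0, 63.0, 66.0], **limits))  # still low, but accelerating
    print(assess([70.0, 74.0, 78.0, 81.0], **limits))        # past the level limit
```

In the second series, the temperature is still well below the level limit and rising more slowly than the rate limit. Its steady acceleration alone moves it to "escalating", which is the earlier signal the level check would miss. Real signals are noisy, so a practical version would smooth readings before differencing rather than react to a single sample.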

Why Time-Based Maintenance Isn’t the Villain

Time-based maintenance persists because it works in stable environments. It is predictable, easy to plan, and operationally straightforward.

The issue is not that time-based schedules exist. The issue is that they are often asked to carry risk they were never designed to handle.

In variable, high-consequence operations, calendars cannot account for changing loads, environmental stress, or intermittent degradation. That is where condition-based insight matters.

Condition monitoring does not replace time-based maintenance. It supplements it where timing matters most.

Seeing Earlier Changes the Outcome

Across automated and industrial environments, a consistent pattern emerges.

Most downtime is not caused by unknown failure modes. It is caused by degradation that was visible only after options were gone. When teams surface degradation earlier, the work performed often stays the same. The difference is when it happens.

Earlier signals allow maintenance to be planned instead of rushed. Late signals force response instead of choice.

That distinction defines whether reliability supports operations or reacts to them.

The Cost of Treating Accuracy as the Goal

When detection arrives too late:

- Secondary damage increases repair scope

- Downtime spreads across dependent equipment

- Labor shifts from planned work to emergency response

- Safety exposure increases under pressure

- Maintenance decisions become inconsistent and defensive

These are not failures of prediction. They are failures of timing.

A Better Question for Reliability Conversations

Prediction accuracy is easy to ask about. It is also easy to misunderstand. A more useful question is harder, but far more revealing:

Does this insight arrive early enough to change what we do?

Detection timing answers that question. Accuracy alone does not.

Turn early signals into uptime.

Book a working session with one of our condition-based monitoring experts. We’ll review your assets, assess your maintenance maturity, and show how multi-sensor monitoring catches issues hours, days, or weeks earlier than manual rounds, giving you a clear path to fast, measurable ROI.